SHAREPOINT 2013 – DISTRIBUTED CACHE AND NEWSFEED QUESTIONS & ANSWERS

- Can the cache be interrogated to obtain the names of users who have posted and details of the data?

No. The cache cannot be interrogated to obtain the names of users who have posted or other details of the data.

- Is one Distributed Cache host the main one and the other a standby, or do both serve feeds?

Both Distributed Cache servers serve feeds. Running two hosts is for load balancing, not for high availability.

- What happens if one host goes down or is rebooted without warning? Will I lose feeds?

The feeds will not be lost; they are stored in the database.

- Is this issue due to using dedicated mode as opposed to collocated?

When a new post is created, the content of that post is added to a profile property called SPS-StatusNotes, then to the Microfeed list on the user’s My Site, and then to the Distributed Cache.

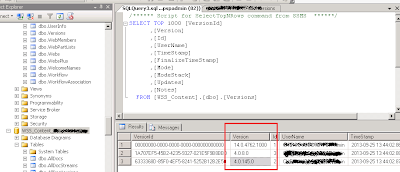
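As a rough illustration of the write path above, and of why a cache host restart does not lose feeds, here is a minimal Python sketch. It is a toy model, not SharePoint code: `FeedStores`, `publish_post`, and `simulate_cache_host_restart` are hypothetical names, and plain dictionaries stand in for the profile database, the Microfeed list, and the Distributed Cache.

```python
from dataclasses import dataclass, field


@dataclass
class FeedStores:
    """Hypothetical in-memory stand-ins for the three places a post is written."""
    profile_properties: dict = field(default_factory=dict)  # user -> {property name: value}
    microfeed_lists: dict = field(default_factory=dict)     # user -> list of posts (durable)
    distributed_cache: dict = field(default_factory=dict)   # user -> list of posts (volatile)


def publish_post(stores, user, text):
    """Write a post to the three locations in the order the text describes."""
    # 1. The SPS-StatusNotes profile property holds the content of the post.
    stores.profile_properties.setdefault(user, {})["SPS-StatusNotes"] = text
    # 2. The Microfeed list on the user's My Site stores the post permanently.
    stores.microfeed_lists.setdefault(user, []).append(text)
    # 3. The cache receives a copy for fast reads.
    stores.distributed_cache.setdefault(user, []).append(text)


def simulate_cache_host_restart(stores):
    """Model an unplanned restart: only the volatile cache content is dropped."""
    stores.distributed_cache.clear()


stores = FeedStores()
publish_post(stores, "alice", "Hello from my newsfeed")
simulate_cache_host_restart(stores)
print(stores.microfeed_lists["alice"])  # the durable copy is still present
```

In this model the restart empties only the cache, while the Microfeed list and the profile property keep their copies.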

- What is the best way to confirm that new posts were added successfully?

Use a SQL Profiler trace, which reveals that SharePoint updates the SPS-StatusNotes property using a stored procedure called dbo.profile_UpdateUserProfileData in the Profile database.

- Where are Newsfeeds stored?

The Microfeed list on a user’s My Site is the ONLY location where newsfeed posts are permanently stored. When a user creates a newsfeed post, a new list item is created in the Microfeed list. The list items in the Microfeed list contain the content of the newsfeed posts as well as other metadata about each post.

- How can I get a newsfeed onto my web page?

The Newsfeed web part loads the data from the AppFabric cache and displays it on the user’s My Site.

- What happens when a user follows a tag?

When a user follows a tag, the feed timer job updates the distributed cache to surface the change in the newsfeed.

- How often are the newsfeeds updated and what is the process?

By default, the feed job runs once every 10 minutes. To examine this job in ULS Viewer, create a filter where Name contains “ActivityFeedJob”.

The feed job processes profile changes that have occurred since the last time it ran. Each time the job processes changes, it updates the ActivityEventsConsolidated and ActivityEventsPublished tables with a new time stamp. Each time the job begins, it reads the old time stamp from these tables and uses it to query the UserProfileEventLog table of the profile database. After identifying the changes from UserProfileEventLog, the job processes the changes themselves. Processing changes essentially means updating the Microfeed list with new list items and adding data to the AppFabric cache, according to the profile’s privacy settings.

- How are the newsfeeds repopulated if a failure occurs?

The timer job named Feed Cache Repopulation Job repopulates the feed cache (also known as the Distributed Activity Feed LMT Cache). LMT stands for Last Modified Time. The internal name of this job is UPA_Title_LMTRepopulationJob. The default schedule of this job is once every five minutes. If you are using ULS Viewer to examine this timer job, enter a filter where Name contains “LMTRepopulationJob”. If you want to determine whether repopulation was needed and whether it succeeded, use a filter where EventId contains “aipj”. When the Feed Cache Repopulation Job starts, it issues an IsRepopulationNeeded request to FeedCacheService.svc. IsRepopulationNeeded will check for a key within the DistributedActivityFeedLMTCache (AppFabric) and if it exists, will return true.

- How long should it take to repopulate the feeds?

The time it takes to repopulate the data depends on many factors, such as the size of the feed cache, the architecture of the SharePoint farm, and the resources in use while the repopulation job runs. Therefore, a specific time cannot be given.

- What is the LMT cache?

The LMT cache holds feed-related metadata, such as post count. By default, this cache is repopulated by the Feed Cache Repopulation Job. The Update-SPRepopulateMicroblogLMTCache cmdlet refreshes the cache for the entire farm. If the cache has lost all of its data, this command may be resource intensive.
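The time-stamp handling described earlier for the feed job (read the old time stamp, query the event log for newer changes, process them, store a new time stamp) can be sketched in Python as below. This is a minimal model under stated assumptions, not the job's actual implementation: `run_feed_job`, the `state` dictionary, and the shape of the event records are hypothetical, and a plain list stands in for the UserProfileEventLog table.

```python
from datetime import datetime


def run_feed_job(state, event_log, process_change):
    """One pass of a time-stamp-driven job (illustrative model only).

    state          -- dict holding "last_processed", standing in for the stored time stamp
    event_log      -- list of dicts with a "time" key, standing in for UserProfileEventLog
    process_change -- callback that would update the Microfeed list and the cache
    """
    # Read the time stamp saved by the previous run.
    last_run = state["last_processed"]
    # Select only the changes logged after that time stamp, oldest first.
    changes = sorted(
        (event for event in event_log if event["time"] > last_run),
        key=lambda event: event["time"],
    )
    for change in changes:
        process_change(change)
    # Store a new time stamp so the next run skips what was just handled.
    if changes:
        state["last_processed"] = changes[-1]["time"]
    return len(changes)


state = {"last_processed": datetime(2024, 1, 1, 12, 0)}
log = [
    {"time": datetime(2024, 1, 1, 11, 50), "user": "bob"},    # already handled earlier
    {"time": datetime(2024, 1, 1, 12, 5), "user": "alice"},   # new since the last run
]
handled = []
first_pass = run_feed_job(state, log, handled.append)
second_pass = run_feed_job(state, log, handled.append)
```

In this model the first pass handles only the entry logged after the stored time stamp, and an immediate second pass finds nothing new because the time stamp has moved forward.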

